1. Why is it difficult to test OSB's components?

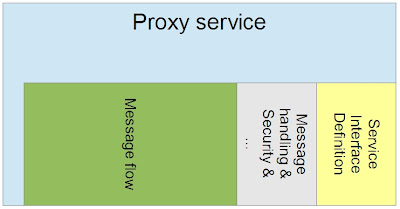

Testing components in OSB is not the same task as testing components written in Java or another programming language. The main difference is that a Java component can be tested in isolation, but an OSB component usually can't: we need the entire environment (the backend systems and services used by the Service Bus) if we want to test it. The other problem is that OSB has no built-in testing facility. Our option for testing is to send requests to the proxy service and examine the responses, i.e. to treat OSB as a black box.
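To make the black-box idea concrete, here is a minimal sketch in Python using only the standard library. The proxy URL, the `getCustomer` operation and the `id` field are all made up for illustration; substitute the endpoint and payload of your own proxy service.

```python
import urllib.request
import xml.etree.ElementTree as ET

# Hypothetical proxy service URL; replace it with an endpoint from your own OSB domain.
PROXY_URL = "http://localhost:7001/CustomerProxy"

# Hypothetical request payload, invented for this example.
REQUEST = """<soapenv:Envelope xmlns:soapenv="http://schemas.xmlsoap.org/soap/envelope/">
  <soapenv:Body>
    <getCustomer><id>42</id></getCustomer>
  </soapenv:Body>
</soapenv:Envelope>"""


def send_request(url, envelope, timeout=5.0):
    """POST a SOAP envelope to the proxy service and return the raw response body."""
    request = urllib.request.Request(
        url,
        data=envelope.encode("utf-8"),
        headers={"Content-Type": "text/xml; charset=utf-8", "SOAPAction": '""'},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")


def extract_field(response_xml, tag):
    """Return the text of the first element with the given local name, or None."""
    root = ET.fromstring(response_xml)
    for element in root.iter():
        # Strip the "{namespace}" prefix that ElementTree puts in front of tag names.
        if element.tag.rsplit("}", 1)[-1] == tag:
            return element.text
    return None


def check_customer_lookup():
    """Black-box check: only the response of the proxy service is inspected."""
    body = send_request(PROXY_URL, REQUEST)
    assert extract_field(body, "id") == "42", "unexpected customer id in response"
```

Calling `check_customer_lookup()` only makes sense once the proxy service and everything behind it (real or mocked) is up and running, which is exactly the difficulty described above.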

It is possible to test some components without the OSB server (e.g. XQuery transformations). Using such components is strongly recommended (we sometimes have a choice about which type of element to use in the proxy service), because testing these parts of the OSB code in advance heads off many future problems.

So we have two main tasks if we want to build a test environment for Oracle Service Bus:

- building (mocking) the environment of OSB

- working out how to test the components

2. Mocking the world

It is vital that the test environment matches the production environment. However, we quickly realise that we can guarantee this for our own components but not for the backend systems at the client's site (just think of really huge systems like SAP). We still need these systems to execute our tests. More precisely, we need an endpoint that is exactly the same as in the real system (so that we can send our request), and this endpoint should send back a response (in the case of a synchronous operation). The solution is to simulate the systems.

Simulating a system is not so complicated in the case of web services. There are many tools that can be used (I prefer soapUI). If the protocol isn't SOAP, mocking the systems is more difficult. Sometimes no suitable tool can be found, and then we have to build a temporary solution (e.g. a fake interface written in Java or another programming language, deployed onto an application server if necessary).
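As an illustration of such a temporary solution, the sketch below is a tiny fake backend written in Python with the standard library only. It is not tied to any real system: the `orderStatus` payload and the names `FakeBackendHandler` and `start_fake_backend` are invented for the example, and a real mock would have to reproduce the endpoint of the system it replaces.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical canned answer; a real mock would return what the real backend returns.
CANNED_RESPONSE = b"<orderStatus><state>SHIPPED</state></orderStatus>"


class FakeBackendHandler(BaseHTTPRequestHandler):
    """Answers every POST with the same canned XML document."""

    def do_POST(self):
        # Read and discard the request body before answering.
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)
        self.send_response(200)
        self.send_header("Content-Type", "text/xml; charset=utf-8")
        self.send_header("Content-Length", str(len(CANNED_RESPONSE)))
        self.end_headers()
        self.wfile.write(CANNED_RESPONSE)

    def log_message(self, format, *args):
        # Suppress the default per-request logging to stderr.
        pass


def start_fake_backend(port=0):
    """Start the fake backend in a background thread; port 0 picks any free port."""
    server = HTTPServer(("127.0.0.1", port), FakeBackendHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

After `server = start_fake_backend(8088)` the business service in OSB can be pointed at `http://127.0.0.1:8088/` instead of the real system; `server.shutdown()` stops it again.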

As the diagram above shows, we have to group the services in the "mocked world" differently than in the real world. It simply means that we will mock all the web services with one tool, all the HTTP over XML services with another tool (or the same one), and so on.

Here are some ideas for simulating the different kinds of services (if you have other ideas, please let me know):

- SOAP based webservices

soapUI - www.soapui.org

- REST

soaprest-mocker - http://sourceforge.net/p/soaprest-mocker/wiki/Home/

restito - https://github.com/mkotsur/restito

- DB call

It depends on whether a DB Adapter or the built-in OSB function fn-bea:execute-sql is used. In the second case we need a database to execute this function, but it doesn't have to be the real database!

- RMI (EJB)

Probably the best approach is to write an EJB with exactly the same signature but a different body (one that contains no business logic, just simple logic that sends back the response).

- HTTP over XML

soapUI

- CORBA

CorbaMock - sourceforge.net/projects/corba-mock/

As I mentioned earlier, OSB doesn't have a built-in testing facility, so it can only be tested by sending it requests and examining the responses. This means we have to build a lot of test scenarios and a lot of requests for them. However, if we think of our mocked services as static endpoints, we would have to create many responses for each mocked backend system too! So it is crucial to use a tool that can build dynamic responses based on the received requests (e.g. copy some fields from the request to the response, put timestamps or random values in the response, and so on).
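To show what "dynamic" means here, the following Python sketch builds a response from the incoming request instead of returning a fixed document. It only illustrates the idea and is not how any particular mocking tool implements it; the element names `orderId`, `confirmationId` and `processedAt` are assumptions made up for the example.

```python
import uuid
import xml.etree.ElementTree as ET
from datetime import datetime, timezone


def build_dynamic_response(request_xml):
    """Build a response that echoes the request's <orderId> and adds generated values."""
    request = ET.fromstring(request_xml)
    # Look for <orderId> directly under the root element; empty string if it is missing.
    order_id = request.findtext("orderId", default="")

    response = ET.Element("orderResponse")
    # Field copied from the request.
    ET.SubElement(response, "orderId").text = order_id
    # Random value, different for every call.
    ET.SubElement(response, "confirmationId").text = uuid.uuid4().hex
    # Current UTC time in ISO 8601 format.
    ET.SubElement(response, "processedAt").text = datetime.now(timezone.utc).isoformat()
    return ET.tostring(response, encoding="unicode")
```

With one function like this a single mocked endpoint can serve every test scenario that differs only in the order id, so we don't need a separate static response per request.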

I prefer soapUI because it covers most of the tasks that come up when testing or mocking web-service-based services. It can run load tests, mock services (e.g. build dynamic responses based on the received requests), create unit tests and so on.

I am afraid that testing is too big a topic to pack into one blog entry, so

To Be Continued...